In research, inequalities related to experience and language exist [1]. AI has the potential to reduce them by improving the language and argumentation of texts. Customized knowledge bases can further improve the availability and accessibility of knowledge.

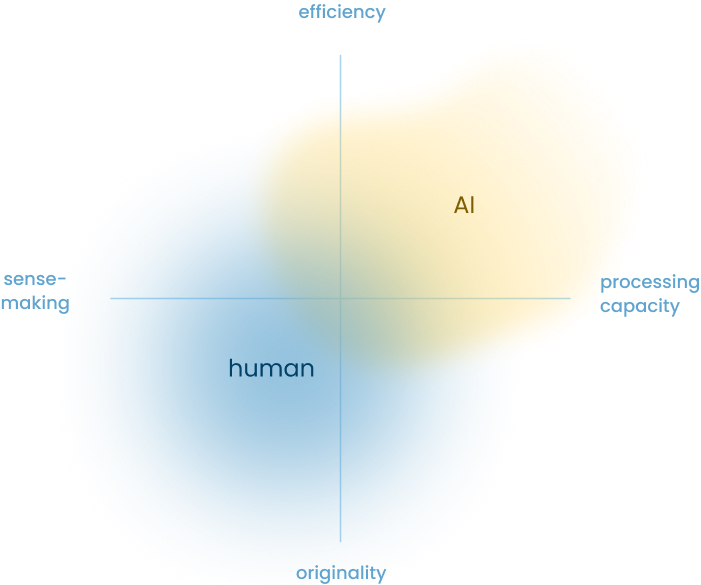

The use of AI in daily research tasks has risen significantly [2]. It can support experimental design, data cleaning, hypothesis generation, the formulation of research questions, the summarising of datasets and the suggestion of follow-up experiments. However, researchers are asking for AI tools that act as sparring partners [3][4] rather than one-click solutions.

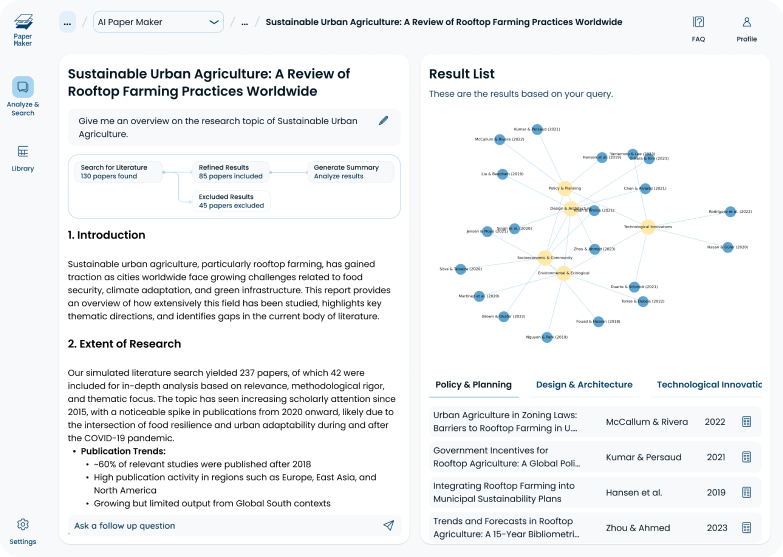

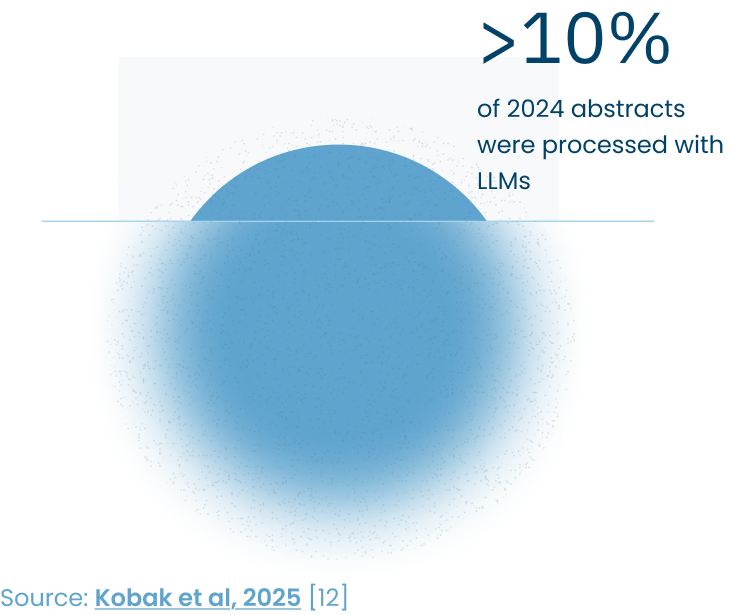

Due to the growing number of publications in many academic fields [5], researchers are under increasing pressure to read large bodies of literature. Modern AI technologies can help process, filter and aggregate relevant literature and thereby reduce researchers' workload.
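
To make the filtering step concrete, here is a minimal sketch of ranking abstracts by how many terms of a research question they contain. It is a deliberately simple keyword-overlap heuristic, not how any particular AI literature tool works; the function names, the small stop-word list, the `threshold` parameter and the example papers are all hypothetical.

```python
import re

# Hypothetical stop-word list; a real tool would use a larger one.
STOP_WORDS = {"a", "an", "and", "in", "of", "on", "the", "to", "for"}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its distinct words, minus stop words."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}


def relevance(query: str, abstract: str) -> float:
    """Fraction of distinct query terms that also occur in the abstract."""
    query_terms = tokenize(query)
    if not query_terms:
        return 0.0
    return len(query_terms & tokenize(abstract)) / len(query_terms)


def filter_papers(query: str, papers: list[dict], threshold: float = 0.5) -> list[tuple[float, str]]:
    """Keep papers scoring at least `threshold`, highest score first."""
    scored = [(relevance(query, p["abstract"]), p["title"]) for p in papers]
    kept = [(score, title) for score, title in scored if score >= threshold]
    return sorted(kept, key=lambda pair: pair[0], reverse=True)


# Usage with two made-up abstracts.
papers = [
    {"title": "Paper A", "abstract": "We study deskilling among researchers who rely on AI tools."},
    {"title": "Paper B", "abstract": "A survey of protein folding benchmarks."},
]
print(filter_papers("AI deskilling researchers", papers))
```

Term overlap is chosen here only because it is easy to follow; practical systems typically rank by semantic similarity instead, which also catches synonyms that share no words with the query.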

AI tools are increasingly used to assist with scientific writing [2]. Editing tools can improve grammar in manuscripts, especially for authors writing in a second language. Citation tools can format references and suggest relevant sources. Lastly, summarization features facilitate reading and understanding academic literature.

Established large language models lack transparency regarding both their operational mechanisms and the characteristics of their training data. This black-box nature conflicts with the scientific principles of traceability and verifiability. Irreproducible statements, ideas or literature references without traceable sources can be harmful if adopted [6].

The integration of AI into research has the potential to disrupt the conventional concept of authorship. When substantial sections of text are generated, or hypotheses are proposed, by an AI system, the attribution of intellectual property becomes a salient issue [11].

While AI tools can reduce researchers' workload and support the creation of new ideas, they also carry a risk of deskilling [7], that is, the loss of skills such as critical thinking through overreliance on AI. Consequently, new competencies such as the critical evaluation of AI outputs are required. In addition, existing skills such as editing are extended to AI-generated outputs (upskilling).

Although AI systems are often regarded as neutral, they are shaped by the biases inherent in their training data. In research, this can result in certain perspectives being systematically favoured or ignored. The issue becomes particularly problematic when researchers use AI as a seemingly objective tool without critically considering these underlying biases [8][9].

Large language models have a significant drawback: they are trained on massive web datasets that often contain personal data, such as names and birth dates, as well as intellectual property [10]. In addition, users may enter personal data that could later be misused. These issues can be addressed through data minimization (using only the data a task requires) and through encryption or masking of personal data.
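
As an illustration of the two mitigations, the following sketch first drops every field a task does not need (data minimization) and then replaces email addresses, ISO-formatted dates and known names with placeholders (masking) before text is passed to an AI tool. The regular expressions, function names and the example record are hypothetical and cover only a few obvious patterns; they are not a complete detector of personal data.

```python
import re

# Illustrative patterns only; real personal-data detection needs far more coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
ISO_DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")  # e.g. birth dates such as 1990-04-12


def minimize(record: dict, needed: set[str]) -> dict:
    """Data minimization: keep only the fields the task actually requires."""
    return {key: value for key, value in record.items() if key in needed}


def mask(text: str, names: list[str]) -> str:
    """Masking: replace emails, ISO dates and the given names with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = ISO_DATE.sub("[DATE]", text)
    for name in names:
        text = re.sub(re.escape(name), "[NAME]", text)
    return text


# Usage with a made-up study record.
record = {
    "participant": "Jane Doe",
    "born": "1990-04-12",
    "email": "jane@example.org",
    "notes": "Jane Doe (jane@example.org, born 1990-04-12) reported high workload.",
}
safe = minimize(record, {"notes"})
print(mask(safe["notes"], ["Jane Doe"]))
```

Minimizing before masking means fields that are never sent cannot leak through a pattern the masking step misses.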