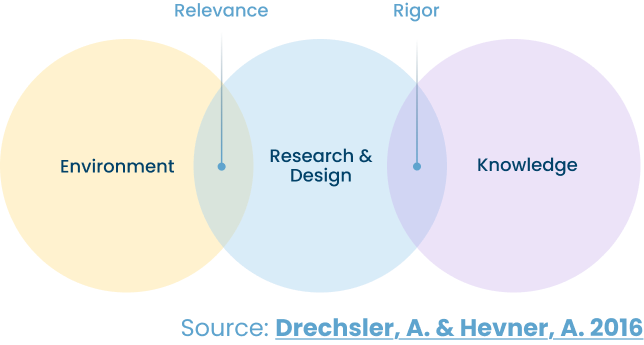

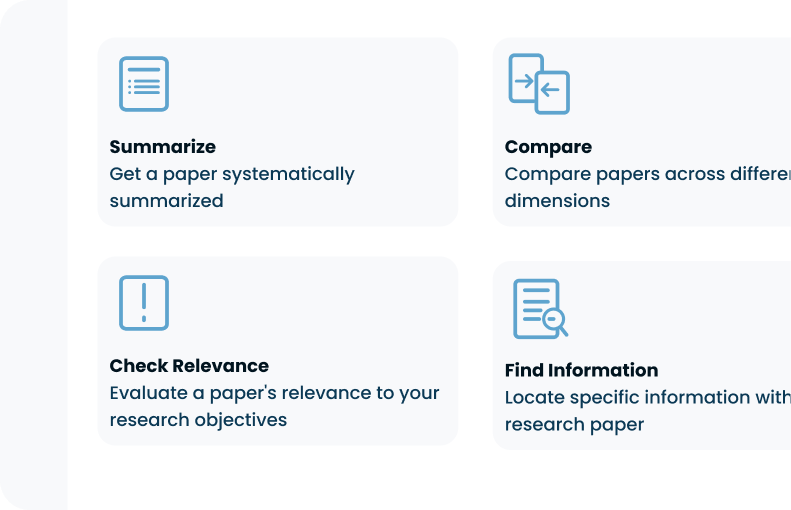

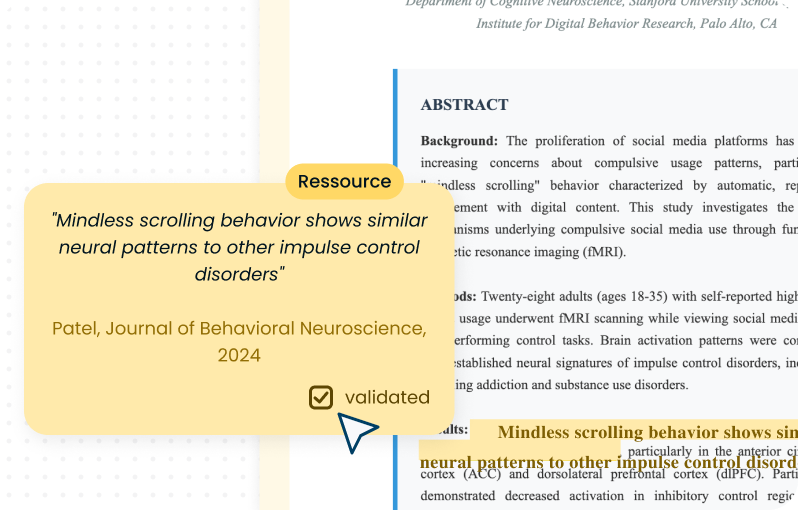

We are developing a framework to meet scientific requirements and protect the integrity of researchers. Referenced, high-quality outputs and fraud prevention measures increase the acceptance of the tool in the scientific community.

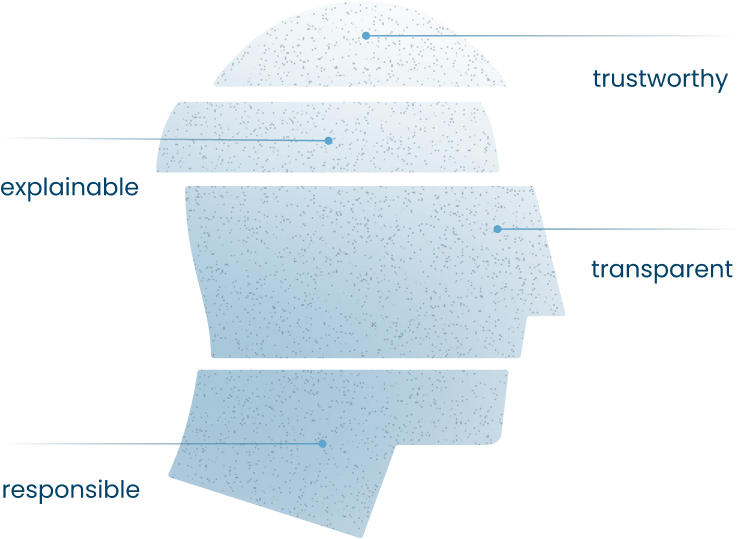

We take responsibility for our solution and want users to place an appropriate level of trust in our tool. To that end, we explain the risks of use and avoid them by design, minimizing how often they occur.

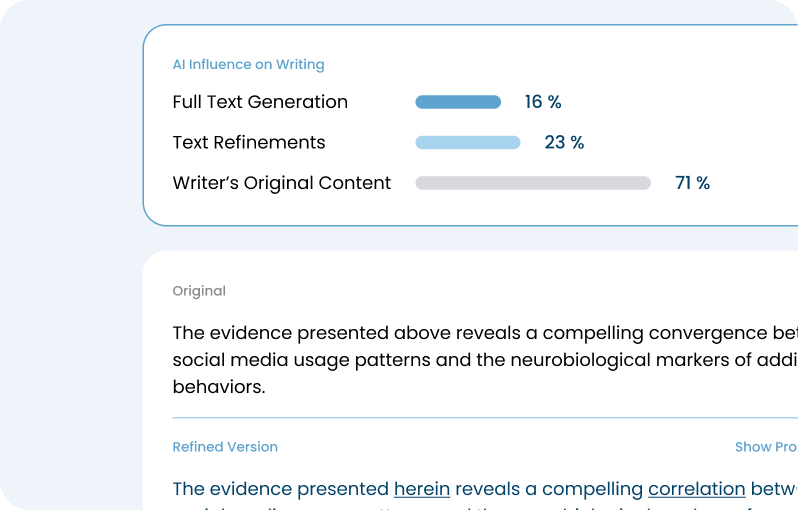

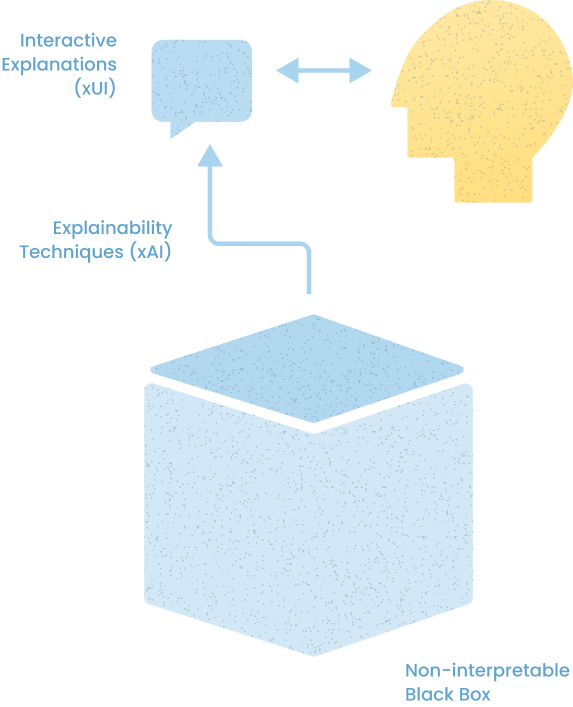

Our approach to human-centred AI (HCAI) has two key aspects. First, we aim to maximise the degree of human control and ensure the user is kept in the loop. Second, product development is based on human-centred design principles.

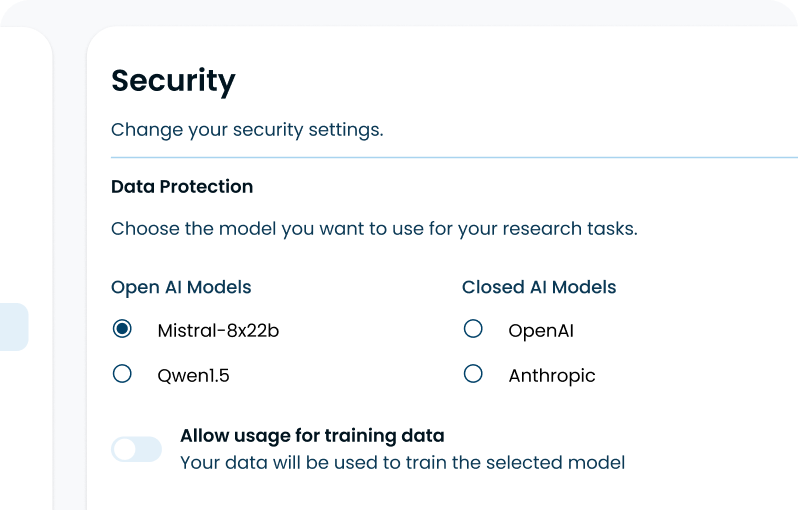

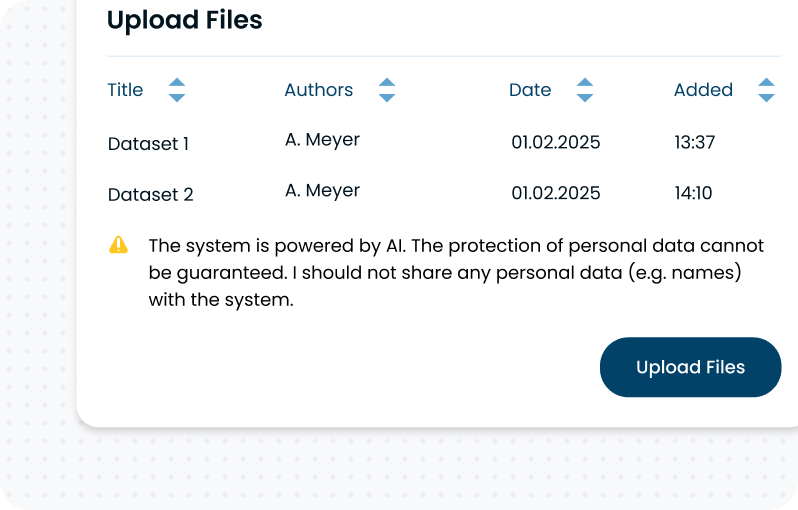

Protecting sensitive scientific data is one of our core principles. It also protects the novelty value of our users' hypotheses and research results. To this end, we develop solutions that incorporate privacy by design.

We are challenging the status quo by developing a software architecture framework that complies with the EU AI Act and GDPR.

Our architecture is based on Zero Trust and Zero Knowledge principles. It uses a task-worker model where stateless workers, including on-premise deployments, execute tasks securely. Secrets are stored encrypted and only decrypted in-memory during execution. All data is handled through S3-compatible storage, whether cloud-based or on-premise. All operations are authenticated and governed by strict policies to ensure isolated and verifiable execution.
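To make the task-worker idea more concrete, here is a minimal Python sketch of how a stateless worker might authenticate a task, check it against a policy, and decrypt a secret only in memory while the task runs. It is an illustration under stated assumptions, not our actual implementation: the names `Task`, `sign_task`, `run_task`, and `toy_xor` are hypothetical, the HMAC signature stands in for whatever authentication scheme is used, and the XOR function is a placeholder for a real authenticated cipher.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass
from typing import Callable, Set


@dataclass(frozen=True)
class Task:
    action: str              # what the worker is asked to do
    object_key: str          # key of the input object in S3-compatible storage
    encrypted_secret: bytes  # the secret travels and rests in encrypted form
    signature: str           # hex HMAC-SHA256 over the fields above


def _payload(action: str, object_key: str, encrypted_secret: bytes) -> bytes:
    # Canonical byte representation of the fields covered by the signature.
    return json.dumps([action, object_key, encrypted_secret.hex()]).encode()


def sign_task(signing_key: bytes, action: str, object_key: str,
              encrypted_secret: bytes) -> Task:
    mac = hmac.new(signing_key, _payload(action, object_key, encrypted_secret),
                   hashlib.sha256)
    return Task(action, object_key, encrypted_secret, mac.hexdigest())


def run_task(task: Task, signing_key: bytes, allowed_actions: Set[str],
             decrypt: Callable[[bytes], bytes],
             handler: Callable[[str, bytes], str]) -> str:
    # 1. Authenticate: reject any task whose signature does not match.
    expected = hmac.new(
        signing_key,
        _payload(task.action, task.object_key, task.encrypted_secret),
        hashlib.sha256,
    ).hexdigest()
    if not hmac.compare_digest(expected, task.signature):
        raise PermissionError("task signature is invalid")
    # 2. Policy: only explicitly allowed actions may run.
    if task.action not in allowed_actions:
        raise PermissionError(f"action {task.action!r} is not permitted")
    # 3. Decrypt in memory only; the worker keeps no state between tasks
    #    and never writes the plaintext secret anywhere.
    secret = decrypt(task.encrypted_secret)
    return handler(task.object_key, secret)


def toy_xor(key: bytes) -> Callable[[bytes], bytes]:
    # ASSUMPTION / placeholder: XOR is NOT real encryption. It only keeps
    # this sketch dependency-free; use an authenticated cipher in practice.
    return lambda data: bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


if __name__ == "__main__":
    cipher = toy_xor(b"demo-key")
    task = sign_task(b"signing-key", "summarize", "inputs/paper.txt",
                     cipher(b"api-token"))
    result = run_task(task, b"signing-key", {"summarize"}, cipher,
                      lambda key, secret: f"processed {key}")
    print(result)
```

In this sketch the worker holds nothing between calls: everything it needs arrives with the task, and a tampered or disallowed task is rejected before any secret is decrypted.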