

What is Explainable Artificial Intelligence (XAI)?

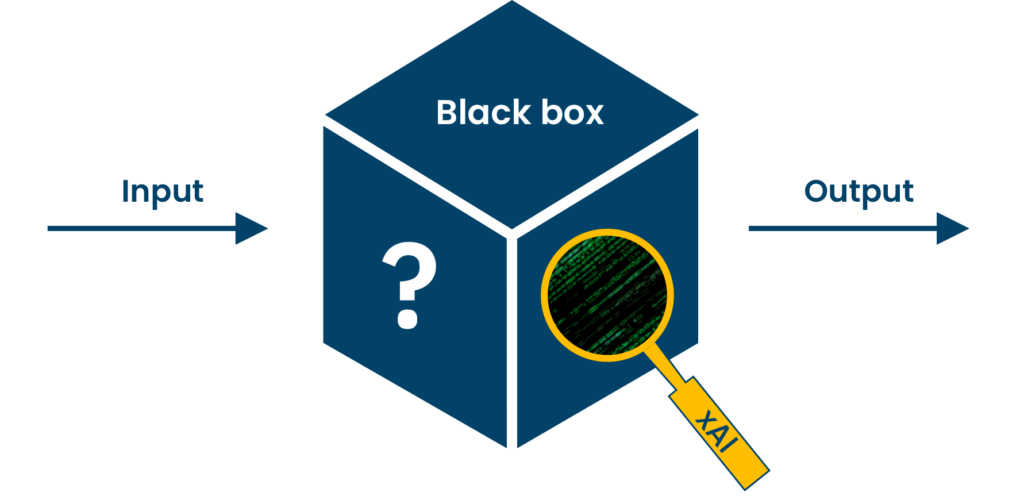

Explainable Artificial Intelligence (XAI) is a recent approach in the field of artificial intelligence that focuses on making Artificial Intelligence (AI) systems more transparent and understandable to humans [1]. The term “XAI” was popularized by the Defense Advanced Research Projects Agency (DARPA), a research agency of the United States Department of Defense, whose XAI program is described in a 2019 overview [2]. The current surge in XAI research and development is driven by a growing recognition of its importance, particularly in contrast to traditional “black box” AI models.

XAI aims to demystify AI decision-making processes by developing techniques and algorithms that provide insights into how and why AI systems reach specific outcomes [3]. By allowing us to peer into the “mind” of AI, XAI aims to foster trust, more effective utilization, and alignment of AI actions with our expectations and values. XAI could therefore effectively bridge the gap between advanced AI technology and human comprehension in the pursuit of responsible and accountable AI applications.

Figure 1: XAI as a solution to the black box problem.

Why XAI Matters – Solving the Mystery of Black Box AI

Ever wondered how AI systems make decisions that sometimes feel like magic? Often, they operate like impenetrable black boxes, leaving users and stakeholders in the dark about the reasoning behind their choices [3]. This opacity is particularly problematic in fields like healthcare, where AI-driven diagnoses can have life-altering consequences. For example, AI is currently used in diagnostic imaging, helping professionals to detect anomalous patterns in X-rays [5]. In this context, XAI could help professionals by clarifying AI decision-making and by enhancing transparency, trust, and accountability between human users and complex AI models [4]. In an era where AI plays an increasingly pivotal role in our lives, XAI is not just a technical advancement but a fundamental driver of responsible and ethical AI adoption, ensuring that AI technology aligns with human values and expectations.

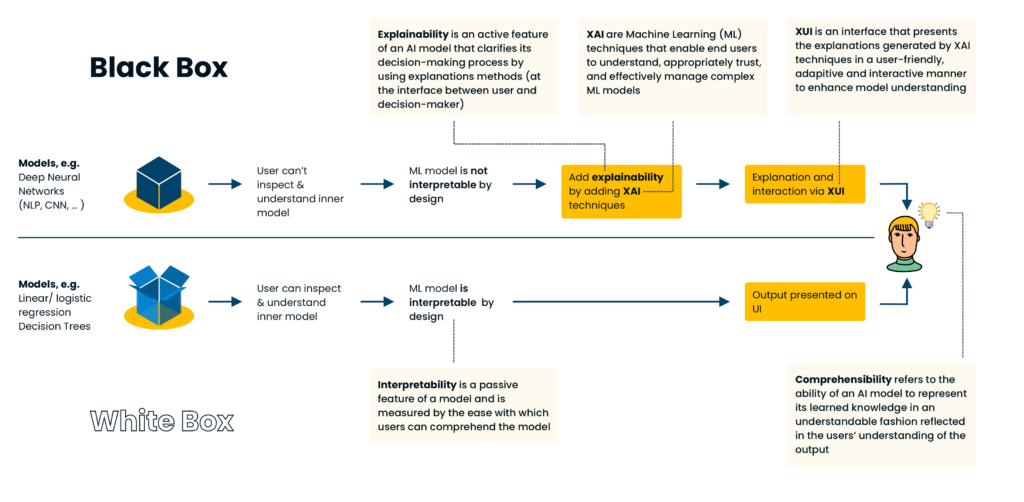

The graphic below shows the difference between white box models, which are inherently interpretable, and black box models, which need XAI techniques to be explainable. We developed this framework by adapting existing definitions of common XAI terminology.

Figure 2: From black box and white box models to comprehensible models. This chart was created by our team based on common XAI terminology in the literature.

What are the Benefits of XAI?

XAI is like a clear window into the AI decision-making process and plays a pivotal role in making the complex world of AI more understandable to humans. At its core, XAI achieves this by offering transparency into the decision-making processes of AI algorithms. It does so through various techniques, including model visualization, feature importance analysis, and generating explanations for AI predictions or recommendations [1]. By employing these methods, XAI sheds light on why AI systems make specific decisions, providing users with insights they can comprehend. This enhanced transparency also benefits non-technical members of organizational teams, bridging the gap between them and the data scientists.

For developers, XAI can serve as a valuable tool for debugging AI models. It provides a deeper understanding of the decision-making process, enabling more effective troubleshooting [6].

What are the Stages of XAI?

In general, explainability can be applied throughout the entire AI development pipeline: before, during and after modeling [7].

In the pre-modeling phase of XAI, data is cleansed and preprocessed, and relevant features are selected. This establishes the groundwork for transparent and reliable AI models by mitigating biases and enhancing data quality.

In the explainable modeling phase of XAI, the goal is to create AI models that provide accurate predictions while being transparent and interpretable. This is achieved through techniques like using interpretable models, feature engineering, and model-independent explainability methods, enabling humans to understand and scrutinize the AI model’s decision-making process.

In the post-modeling stage of XAI, the emphasis shifts to the evaluation and validation of the AI model’s transparency and interpretability. This phase involves assessing the model’s performance, refining explanations, and ensuring that the AI system continues to meet transparency and fairness standards as it operates in real-world scenarios.

Figure 3: The three stages of XAI.

What are some XAI Techniques?

XAI encompasses a range of techniques and methods aimed at making AI systems more transparent and interpretable.

Local vs. Global Explanations

In the realm of XAI, explanations are often divided into global explanations and local explanations [8]. Global explanations tackle the overarching question of “how does a model work?” They offer a broad view of the model’s decision-making process, often presented through visual charts, mathematical formulae, or model graphs. These explanations aim to provide a holistic, top-down understanding of the model’s behavior. On the other hand, local explanations focus on the “why” behind specific predictions for individual inputs. They are more granular, attributing predictions to certain features of the data or aspects of the model’s algorithm. While global explanations give us a general framework of the model, local explanations delve into the specifics of individual decisions, offering a bottom-up perspective.
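The difference between the two perspectives can be sketched with a toy model. The following Python example is only an illustration under stated assumptions: the linear scoring model, its weights, and the feature names are hypothetical, and the features are assumed to be on comparable scales. The global explanation ranks features by the size of their weights, while the local explanation reports how much each feature contributed to one specific prediction.

```python
# Hypothetical linear scoring model; weights and feature names are illustrative only.
WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = 0.1


def predict(x):
    """Return the model score for one input (a dict of feature values)."""
    return BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)


def global_explanation():
    """Global view: rank features by absolute weight across all inputs."""
    return sorted(WEIGHTS, key=lambda name: abs(WEIGHTS[name]), reverse=True)


def local_explanation(x):
    """Local view: contribution of each feature to the score of this one input."""
    return {name: WEIGHTS[name] * x[name] for name in WEIGHTS}


applicant = {"income": 2.0, "debt": 0.5, "years_employed": 4.0}
print("Global ranking:", global_explanation())
print("Local contributions:", local_explanation(applicant))
```

In this sketch the two views can disagree: a feature with a small weight overall may still dominate an individual prediction if its value for that input is large.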

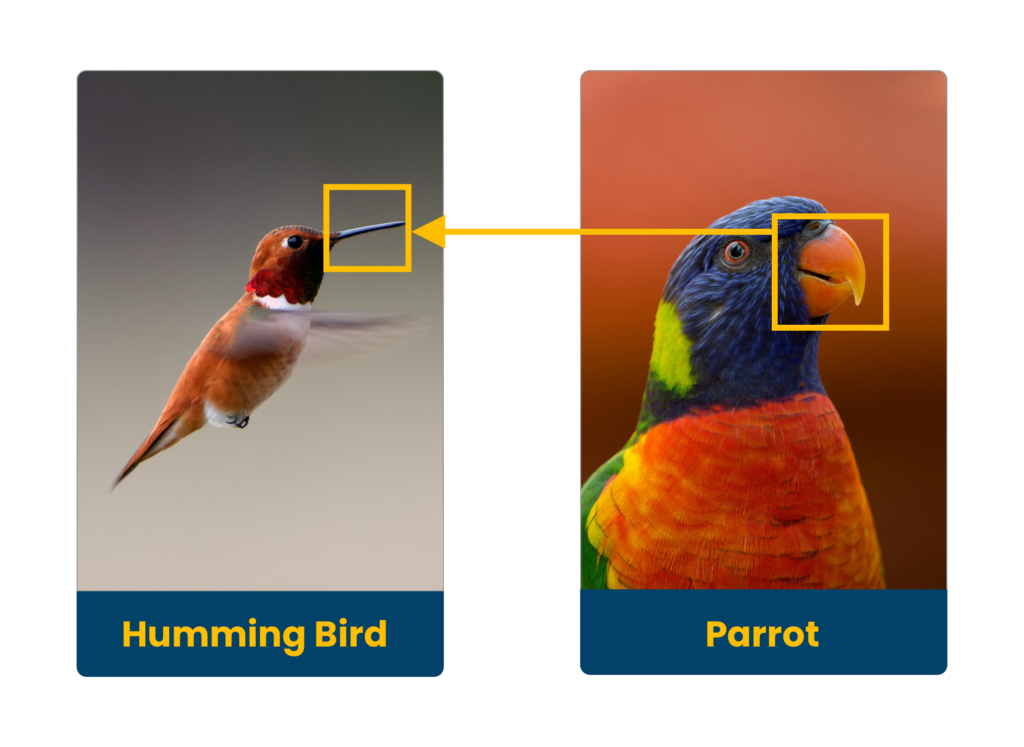

Counterfactual Explanations

Counterfactual explanations provide alternative input scenarios that, if applied, would change the model’s prediction. They aim to help users understand how to achieve desired outcomes [9].

Imagine you applied for a loan, but it was denied by an AI-powered lending system. A counterfactual explanation in this scenario would provide you with an alternative set of actions or conditions that, if met, would have led to your loan being approved. For instance, the explanation might suggest that if your credit score had been 20 points higher, your loan application would have been accepted, giving you a clear actionable insight into improving your chances in the future.

Figure 4: Counterfactual explanations used in computer vision. This graphic was created for illustrative purposes and is based on existing XAI technology found in the literature.
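The loan scenario above can be sketched in a few lines of Python. This is a minimal illustration, not a real lending system: the `approve_loan` rule, its threshold, and the applicant values are hypothetical stand-ins for a black box model. The search simply looks for the smallest credit score increase that flips the decision.

```python
def approve_loan(credit_score, income):
    """Hypothetical stand-in for a black box lending model."""
    return credit_score + income / 1000 >= 700


def credit_score_counterfactual(credit_score, income, step=5, max_increase=200):
    """Return the smallest credit score increase (a multiple of `step`) that
    turns a denial into an approval, 0 if already approved, or None if no
    such increase exists within `max_increase`."""
    if approve_loan(credit_score, income):
        return 0
    for increase in range(step, max_increase + 1, step):
        if approve_loan(credit_score + increase, income):
            return increase
    return None


increase = credit_score_counterfactual(credit_score=640, income=40_000)
print(f"The loan would have been approved with a credit score {increase} points higher.")
```

Practical counterfactual methods usually search over several features at once and prefer changes that are small and realistic for the user; this sketch varies a single feature to keep the idea visible.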

Saliency Maps

Saliency maps can be particularly useful for understanding and interpreting machine learning algorithms that process images. A saliency map is an image that highlights how important each pixel is for the model’s output, e.g. by varying brightness [10].

Figure 5: Saliency maps used in computer vision. This graphic was created for illustrative purposes and is based on existing XAI technology found in the literature.
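One common way to obtain such a map is to measure how strongly the model’s output reacts to a small change in each pixel. The sketch below approximates this sensitivity with finite differences on a tiny grayscale image. The `model_score` function is a hypothetical stand-in for an image classifier and is assumed to respond only to the centre of the image.

```python
def model_score(image):
    """Hypothetical classifier score that depends only on the centre 2x2 patch."""
    return sum(image[r][c] ** 2 for r in (1, 2) for c in (1, 2))


def saliency_map(image, eps=1e-4):
    """Approximate |d score / d pixel| for every pixel with finite differences."""
    base = model_score(image)
    saliency = []
    for r, row in enumerate(image):
        out_row = []
        for c in range(len(row)):
            perturbed = [list(line) for line in image]  # copy, then nudge one pixel
            perturbed[r][c] += eps
            out_row.append(abs(model_score(perturbed) - base) / eps)
        saliency.append(out_row)
    return saliency


def normalise(saliency):
    """Scale values to [0, 1] so they can be rendered as brightness."""
    peak = max(max(row) for row in saliency) or 1.0
    return [[value / peak for value in row] for row in saliency]


image = [
    [0.1, 0.2, 0.1, 0.0],
    [0.3, 0.9, 0.5, 0.1],
    [0.2, 0.4, 0.8, 0.0],
    [0.0, 0.1, 0.2, 0.1],
]
brightness = normalise(saliency_map(image))
for row in brightness:
    print(" ".join(f"{value:.2f}" for value in row))
```

Pixels outside the centre patch receive a brightness of zero because changing them does not affect the score. For neural networks, the same quantity is typically computed with automatic differentiation rather than by perturbing pixels one at a time.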

LIME (Local Interpretable Model-Agnostic Explanations)

LIME is a widely used tool for generating local explanations for machine learning models. Local means that it helps users understand why a model made a particular prediction for a given instance. LIME explains machine learning model predictions by perturbing the input data and observing the resulting changes in predictions. Then, it uses these observations to fit a simple, interpretable model in the neighborhood of that instance, which highlights the features that most influenced the model’s decision [11].
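The perturb, weight, and fit idea can be sketched with the Python standard library alone. This is a simplified illustration and not the `lime` package: the `black_box` function, the Gaussian perturbations, the kernel width, and the plain weighted least-squares fit are all assumptions made for this example, and the feature selection step of the original method is omitted.

```python
import math
import random


def black_box(x):
    """Hypothetical non-linear model whose prediction we want to explain."""
    return x[0] ** 2 + 3.0 * x[1]


def solve(a, b):
    """Solve the linear system a @ w = b with Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_explain(instance, n_samples=500, noise=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around `instance` and
    return one coefficient per feature."""
    rng = random.Random(seed)
    d = len(instance)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        # 1. Perturb the input and query the black box.
        sample = [v + rng.gauss(0.0, noise) for v in instance]
        y = black_box(sample)
        # 2. Weight the sample by its proximity to the original instance.
        dist2 = sum((s - v) ** 2 for s, v in zip(sample, instance))
        weight = math.exp(-dist2 / kernel_width ** 2)
        # 3. Accumulate the weighted normal equations (intercept + centred features).
        row = [1.0] + [s - v for s, v in zip(sample, instance)]
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coefficients = solve(xtwx, xtwy)
    return coefficients[1:]  # drop the intercept


weights = lime_explain([2.0, 1.0])
print("Local feature weights:", [round(w, 2) for w in weights])
```

Around the point (2.0, 1.0) the surrogate’s coefficients should be close to the local slopes of the black box, roughly 4 for the first feature and 3 for the second, which is the sense in which the simple model explains this one prediction.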

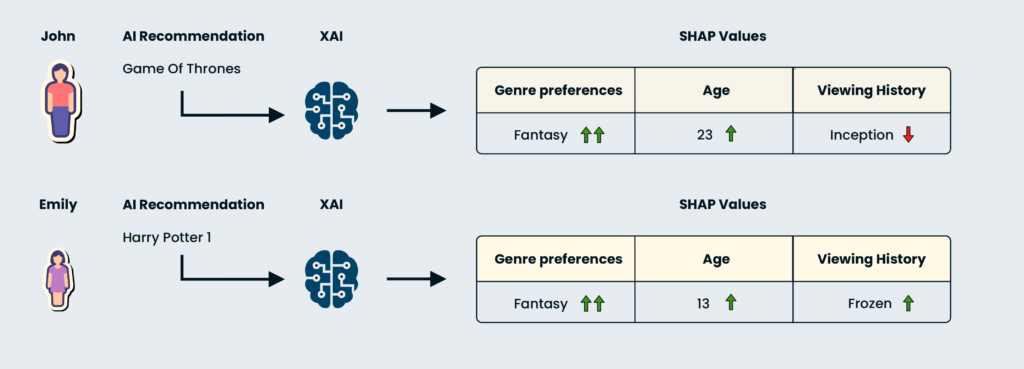

SHAP (SHapley Additive exPlanations)

SHAP is a versatile framework that provides unified explanations for a wide range of machine learning models. It assigns each feature a Shapley value, a concept from cooperative game theory, and thereby offers a coherent approach to explaining model predictions. Like a player in a game, each feature contributes to the model’s prediction. Just as Shapley values fairly distribute the payoff among players in a game, SHAP assigns a fair value to each feature based on its contribution to the prediction outcome [12].

Suppose you’re trying to understand why a specific movie recommendation was made by a streaming platform’s AI algorithm. SHAP can help by breaking down the contribution of each feature, like genre preferences and viewing history, to the recommendation. For example, SHAP might reveal that the recommendation was primarily influenced by your recent interest in science fiction films, making the suggestion more transparent and insightful.

Figure 6: Prediction of a movie recommendation explained by SHAP. This chart was created for illustrative purposes.
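For a model with only a few features, Shapley values can be computed exactly by averaging each feature’s marginal contribution over all orderings in which features are added. The sketch below does this for the movie recommendation example. It is an illustration under stated assumptions and does not use the `shap` package: the `recommend_score` model, the feature names, and the all-zero baseline are hypothetical.

```python
from itertools import permutations

# Hypothetical baseline viewer against which contributions are measured.
BASELINE = {"likes_scifi": 0, "watched_sequel": 0, "weekend": 0}


def recommend_score(x):
    """Hypothetical recommender score with an interaction between two features."""
    score = 0.2
    score += 0.4 * x["likes_scifi"]
    score += 0.1 * x["weekend"]
    score += 0.2 * x["likes_scifi"] * x["watched_sequel"]
    return score


def shapley_values(model, instance, baseline):
    """Exact Shapley values: average marginal contribution over all orderings."""
    features = list(instance)
    totals = {name: 0.0 for name in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)
        previous = model(current)
        for name in order:
            current[name] = instance[name]  # switch this feature to its real value
            value = model(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


viewer = {"likes_scifi": 1, "watched_sequel": 1, "weekend": 1}
phi = shapley_values(recommend_score, viewer, BASELINE)
for name, value in phi.items():
    print(f"{name}: {value:+.2f}")
```

The contributions add up to the difference between the viewer’s score and the baseline score, and the interaction between the two viewing features is split evenly between them. Because the number of orderings grows factorially with the number of features, this brute-force approach is only feasible for very small models; practical tools rely on approximations or model-specific shortcuts.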

Challenges and Limitations – The Realities of XAI

XAI aims to make complex AI models more transparent and understandable, but it faces several challenges:

Learn More About XAI

If you’re interested in delving deeper into XAI, here are some resources to explore further. These articles, research papers, and tools can help you expand your knowledge of this exciting field.

AI Paper Maker’s Role in XAI

The field of XAI is evolving rapidly with researchers working to make AI even more transparent and trustworthy. With our project, “AI Paper Maker”, we’re committed to advancing XAI. Our primary objective is to delve into the development of an XAI architecture capable of processing data in a transparent manner, prioritizing accuracy as a guiding principle. To achieve this goal, we want to combine promising XAI techniques and validate their effectiveness with potential users.

Through this research, we aim to lay the groundwork for creating a prototype application that will simplify and enhance the scientific paper-writing process.

Read more about the project here

Sources

[1] Das, A., & Rad, P. (2020). Opportunities and challenges in explainable artificial intelligence (XAI): A survey. arXiv preprint arXiv:2006.11371.

[2] Gunning, D., & Aha, D. (2019). DARPA’s explainable artificial intelligence (XAI) program. AI Magazine, 40(2), 44-58. https://doi.org/10.1609/aimag.v40i2.2850

[3] Minh, D., Wang, H. X., Li, Y. F., & Nguyen, T. N. (2022). Explainable artificial intelligence: a comprehensive review. Artificial Intelligence Review, 1-66. https://doi.org/10.1007/s10462-021-10088-y

[4] Rajabi, E., & Kafaie, S. (2022). Knowledge graphs and explainable AI in healthcare. Information, 13(10), 459. https://doi.org/10.3390/info13100459

[5] V7 Labs. (2022, November 9). AI in Radiology: Pros & Cons, Applications, and 4 Examples. Retrieved November 13, 2023, from https://www.v7labs.com/blog/ai-in-radiology

[6] Sankhe, A. (n.d.). What is explainable AI? 6 benefits of explainable AI. Retrieved November 28, 2023, from https://www.engati.com/blog/explainable-ai

[7] Khaleghi, B. (2019, July 31). The How of Explainable AI: Pre-modelling Explainability. Retrieved November 13, 2023, from https://towardsdatascience.com/the-how-of-explainable-ai-pre-modelling-explainability-699150495fe4

[8] Kamath, U., & Liu, J. (2021). Explainable Artificial Intelligence: An Introduction to Interpretable Machine Learning. In Springer eBooks. https://doi.org/10.1007/978-3-030-83356-5

[9] Goyal, Y., Wu, Z., Ernst, J., Batra, D., Parikh, D., & Lee, S. (2019, May). Counterfactual visual explanations. In International Conference on Machine Learning (pp. 2376-2384). PMLR.

[10] Li, X. H., Shi, Y., Li, H., Bai, W., Cao, C. C., & Chen, L. (2021, August). An experimental study of quantitative evaluations on saliency methods. In Proceedings of the 27th ACM SIGKDD conference on knowledge discovery & data mining (pp. 3200-3208). https://doi.org/10.1145/3447548.3467148

[11] Ribeiro, M. T., Singh, S., & Guestrin, C. (2016, August). “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining (pp. 1135-1144).

[12] Lundberg, S. M., & Lee, S. I. (2017). A unified approach to interpreting model predictions. Advances in neural information processing systems, 30.

[13] Deloitte UK. (n.d.). A review of Explainable AI concepts, techniques, and challenges. Deloitte UK. Retrieved November 13, 2023, from https://www2.deloitte.com/uk/en/pages/deloitte-analytics/articles/a-review-of-explainable-ai-concepts-techniques-and-challenges.html

[14] Baniecki, H., & Biecek, P. (2023). Adversarial Attacks and Defenses in Explainable Artificial Intelligence: A Survey. arXiv preprint arXiv:2306.06123. https://doi.org/10.48550/arXiv.2306.06123

[15] Chuang, Y. N., Wang, G., Yang, F., Liu, Z., Cai, X., Du, M., & Hu, X. (2023). Efficient XAI techniques: A taxonomic survey. arXiv preprint arXiv:2302.03225. https://doi.org/10.48550/arXiv.2302.03225

[16] European Commission. (2021, April 21). Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts. Retrieved November 14, 2023, from https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52021PC0206