In general, explainability can be applied throughout the entire AI development pipeline: before, during and after modeling [7].

In the pre-modeling phase of XAI, data is cleansed and preprocessed, and relevant features are selected. This lays the groundwork for transparent and reliable AI models by mitigating biases and improving data quality.
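As a rough illustration of what a pre-modeling check could look like, the sketch below audits a tiny dataset for missing values and for differences in label rates between groups. The dataset, the column names, and both helper functions are hypothetical and chosen only for this example; a real project would typically rely on a data-analysis library and domain-specific checks.

```python
from collections import Counter

# Hypothetical toy dataset: each row is a dict, None marks a missing value.
rows = [
    {"age": 34, "income": 52000, "group": "A", "label": 1},
    {"age": None, "income": 48000, "group": "A", "label": 0},
    {"age": 45, "income": None, "group": "B", "label": 0},
    {"age": 29, "income": 61000, "group": "B", "label": 0},
    {"age": 51, "income": 75000, "group": "A", "label": 1},
    {"age": 38, "income": 43000, "group": "B", "label": 1},
]


def missing_rate(rows, column):
    """Fraction of rows in which `column` is missing (None)."""
    return sum(r[column] is None for r in rows) / len(rows)


def positive_rate_by_group(rows, group_col, label_col):
    """Share of positive labels per group, a first hint at imbalance."""
    totals, positives = Counter(), Counter()
    for r in rows:
        totals[r[group_col]] += 1
        positives[r[group_col]] += r[label_col]
    return {g: positives[g] / totals[g] for g in totals}


missing = {c: missing_rate(rows, c) for c in ("age", "income")}
rates = positive_rate_by_group(rows, "group", "label")
print(missing)
print(rates)
```

A large gap between the per-group rates does not prove bias on its own, but it flags something worth investigating before any model is trained.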

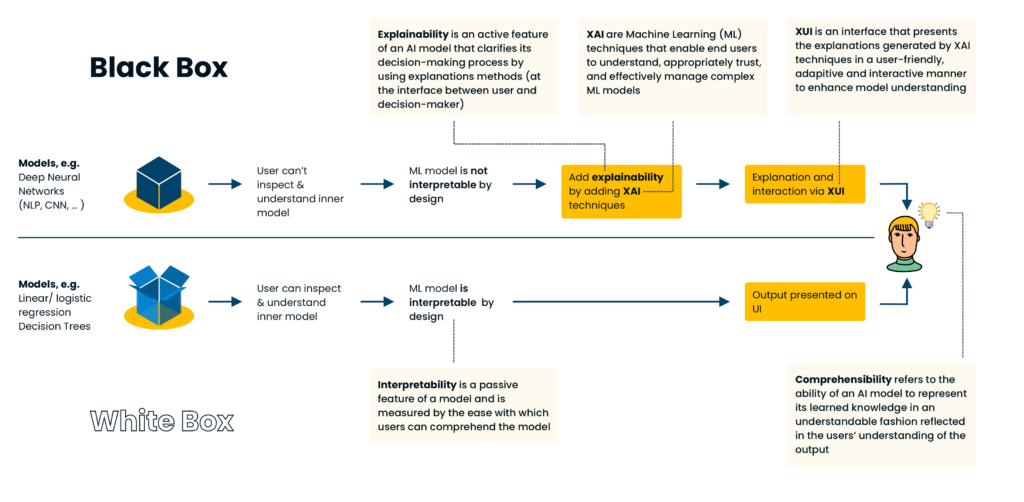

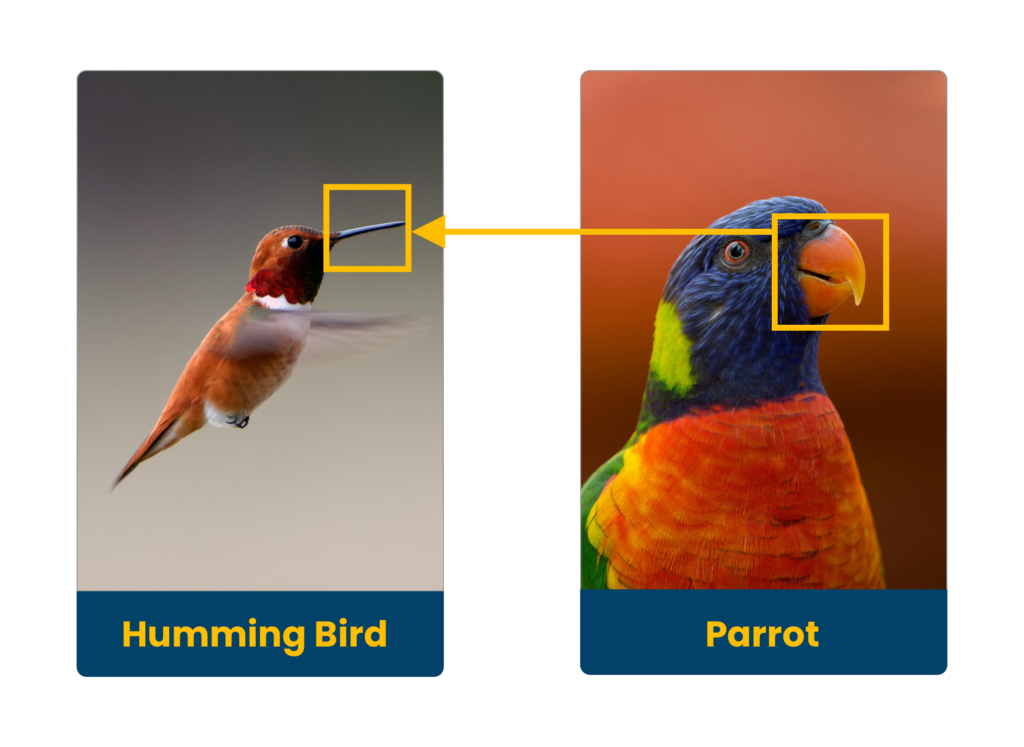

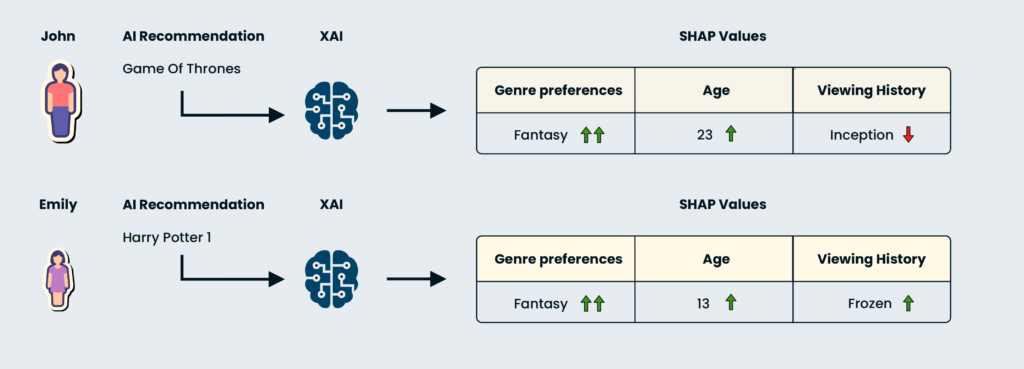

In the explainable modeling phase of XAI, the goal is to create AI models that provide accurate predictions while remaining transparent and interpretable. Techniques include inherently interpretable models, feature engineering, and model-agnostic explainability methods, all of which enable humans to understand and scrutinize the AI model's decision-making process.
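One widely used model-agnostic idea is permutation importance: shuffle a single feature and measure how much the model's error increases. The sketch below is a minimal standard-library version. The `black_box` function is a hypothetical stand-in for any trained model, and the helper names are assumptions made for this example rather than the API of a particular library.

```python
import random


def black_box(x):
    """Hypothetical stand-in for a trained model: only feature 0 matters."""
    return 3.0 * x[0] + 0.0 * x[1]


def mean_squared_error(model, X, y):
    """Average squared difference between predictions and targets."""
    return sum((model(x) - t) ** 2 for x, t in zip(X, y)) / len(X)


def permutation_importance(model, X, y, feature, rng):
    """Increase in error after shuffling the values of one feature column."""
    baseline = mean_squared_error(model, X, y)
    column = [x[feature] for x in X]
    rng.shuffle(column)
    X_perm = [x[:feature] + [v] + x[feature + 1:] for x, v in zip(X, column)]
    return mean_squared_error(model, X_perm, y) - baseline


rng = random.Random(0)
X = [[rng.random(), rng.random()] for _ in range(200)]
y = [black_box(x) for x in X]

importances = [permutation_importance(black_box, X, y, j, rng) for j in range(2)]
print(importances)
```

Because the method only calls the model as a function, the same code would work for any predictor, which is what makes it model-agnostic. Here the second feature receives an importance of zero because the toy model ignores it.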

In the post-modeling stage of XAI, the emphasis shifts to the evaluation and validation of the AI model's transparency and interpretability. This phase involves assessing the model's performance, refining explanations, and ensuring that the AI system continues to meet transparency and fairness standards as it operates in real-world scenarios.
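One way to evaluate an explanation after modeling is to measure its fidelity, that is, how often a simple, human-readable rule agrees with the model it is supposed to explain. The sketch below assumes a hypothetical opaque classifier and a hypothetical surrogate rule; both functions and the evaluation grid are invented for illustration.

```python
def black_box(x):
    """Hypothetical opaque classifier: positive inside the unit circle."""
    return int(x[0] * x[0] + x[1] * x[1] < 1.0)


def surrogate(x):
    """Hypothetical human-readable rule offered as the explanation."""
    return int(abs(x[0]) < 0.8 and abs(x[1]) < 0.8)


def fidelity(model, explanation, inputs):
    """Fraction of inputs on which the explanation agrees with the model."""
    agree = sum(model(x) == explanation(x) for x in inputs)
    return agree / len(inputs)


# Evaluate on a regular grid over [-1.5, 1.5] x [-1.5, 1.5].
grid = [(i / 10, j / 10) for i in range(-15, 16) for j in range(-15, 16)]
score = fidelity(black_box, surrogate, grid)
print(f"fidelity = {score:.3f}")
```

Tracking such a score over time, on data drawn from real usage, is one possible way to notice when an explanation no longer reflects the model's actual behavior.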

XAI aims to make complex AI models more transparent and understandable, but it faces several challenges:

Balancing Performance with Transparency: A key challenge in XAI is balancing model performance with transparency. Making a complex AI model easier to understand often means simplifying it, which can reduce predictive accuracy. Practitioners therefore have to weigh how much accuracy they are willing to give up for interpretability [13].

Security Concerns and Adversarial Attacks: XAI's focus on making AI decisions clear can open new vulnerabilities, especially to adversarial attacks. These attacks use misleading data to manipulate the model's output, exploiting XAI's transparency. This raises serious concerns about the reliability and robustness of AI systems and questions their security and trustworthiness [14].

Computational Intensiveness: Traditional XAI methods often suffer from high computational complexity, which can hinder their deployment in real-time systems. This computational demand limits the practicality of XAI, especially in scenarios requiring rapid decision-making. Overcoming this challenge involves balancing the trade-off between the efficiency and fidelity of explanations [15].

Ethical Considerations (EU AI Act): The proposed EU AI Act plays a pivotal role in regulating AI. It covers AI across various sectors and categorizes AI applications by their risk potential. It introduces strict norms against harmful AI practices and requires rigorous assessments for high-risk systems. This regulation aligns with the global push towards ethical AI, requiring organizations to ensure that their AI models comply with stringent data privacy and ethical standards [16]. As AI systems evolve rapidly, this act is crucial to ensuring the responsible and transparent use of AI.
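To make the computational-cost challenge described above more concrete, the following sketch compares an exact Shapley-value attribution, which enumerates every coalition of features, with a Monte Carlo estimate that samples random feature orderings. It is written in the spirit of the Shapley-based attributions discussed in [12], but it is not the algorithm of any specific library. The `value` function, its weights, and the interaction term are hypothetical.

```python
import itertools
import math
import random


def value(coalition):
    """Hypothetical model payoff for a set of 'present' features."""
    weights = {0: 1.0, 1: 2.0, 2: 3.0, 3: 4.0}
    total = sum(weights[i] for i in coalition)
    # Interaction term so the attribution is not trivially additive.
    if 0 in coalition and 1 in coalition:
        total += 2.0
    return total


def exact_shapley(n, player):
    """Exact Shapley value: enumerates all 2^(n-1) coalitions without `player`."""
    others = [i for i in range(n) if i != player]
    phi = 0.0
    for size in range(n):
        weight = (math.factorial(size) * math.factorial(n - size - 1)
                  / math.factorial(n))
        for subset in itertools.combinations(others, size):
            s = frozenset(subset)
            phi += weight * (value(s | {player}) - value(s))
    return phi


def sampled_shapley(n, player, num_samples, rng):
    """Monte Carlo estimate: averages marginal contributions over random orderings."""
    players = list(range(n))
    total = 0.0
    for _ in range(num_samples):
        rng.shuffle(players)
        before = frozenset(players[:players.index(player)])
        total += value(before | {player}) - value(before)
    return total / num_samples


exact = [exact_shapley(4, p) for p in range(4)]
rng = random.Random(0)
approx = [sampled_shapley(4, p, 2000, rng) for p in range(4)]
print(exact)
print(approx)
```

With four features the exact computation is cheap, but its cost doubles with every additional feature, while the sampled version costs the same per sample regardless of the number of features. The price is an approximation error, which is the efficiency-versus-fidelity trade-off surveyed in [15].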

If you're interested in delving deeper into XAI, here are some resources to explore further. These articles, research papers, and tools can help you expand your knowledge of this exciting field.

The field of XAI is evolving rapidly, with researchers working to make AI even more transparent and trustworthy. With our project, “AI Paper Maker”, we're committed to advancing XAI. Our primary objective is to explore the development of an XAI architecture that processes data transparently, with accuracy as a guiding principle. To achieve this goal, we want to combine promising XAI techniques and validate their effectiveness with potential users.

Through this research, we aim to lay the groundwork for creating a prototype application that will simplify and enhance the scientific paper-writing process.

[1] Das, A., & Rad, P. (2020). Opportunities and challenges in explainable artificial intelligence (XAI): A survey. arXiv preprint arXiv:2006.11371.

[2] Gunning, D., & Aha, D. (2019). DARPA’s explainable artificial intelligence (XAI) program. AI Magazine, 40(2), 44-58. https://doi.org/10.1609/aimag.v40i2.2850

[3] Minh, D., Wang, H. X., Li, Y. F., & Nguyen, T. N. (2022). Explainable artificial intelligence: a comprehensive review. Artificial Intelligence Review, 1-66. https://doi.org/10.1007/s10462-021-10088-y

[4] Rajabi, E., & Kafaie, S. (2022). Knowledge graphs and explainable AI in healthcare. Information, 13(10), 459. https://doi.org/10.3390/info13100459

[5] V7 Labs. (2022, November 9). AI in Radiology: Pros & Cons, Applications, and 4 Examples. Retrieved November 13, 2023, from https://www.v7labs.com/blog/ai-in-radiology

[6] Sankhe, A. (n.d.). What is explainable AI? 6 benefits of explainable AI. Retrieved November 28, 2023, from https://www.engati.com/blog/explainable-ai

[7] Khaleghi, B. (2019, July 31). The How of Explainable AI: Pre-modelling Explainability. Retrieved November 13, 2023, from https://towardsdatascience.com/the-how-of-explainable-ai-pre-modelling-explainability-699150495fe4

[8] Kamath, U., & Liu, J. (2021). Explainable Artificial Intelligence: An Introduction to Interpretable Machine Learning. In Springer eBooks. https://doi.org/10.1007/978-3-030-83356-5

[9] Goyal, Y., Wu, Z., Ernst, J., Batra, D., Parikh, D., & Lee, S. (2019, May). Counterfactual visual explanations. In International Conference on Machine Learning (pp. 2376-2384). PMLR.

[10] Li, X. H., Shi, Y., Li, H., Bai, W., Cao, C. C., & Chen, L. (2021, August). An experimental study of quantitative evaluations on saliency methods. In Proceedings of the 27th ACM SIGKDD conference on knowledge discovery & data mining (pp. 3200-3208). https://doi.org/10.1145/3447548.3467148

[11] Ribeiro, M. T., Singh, S., & Guestrin, C. (2016, August). "Why should I trust you?" Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining (pp. 1135-1144).

[12] Lundberg, S. M., & Lee, S. I. (2017). A unified approach to interpreting model predictions. Advances in neural information processing systems, 30.

[13] Deloitte UK. (n.d.). A review of Explainable AI concepts, techniques, and challenges. Deloitte UK. Retrieved November 13, 2023, from https://www2.deloitte.com/uk/en/pages/deloitte-analytics/articles/a-review-of-explainable-ai-concepts-techniques-and-challenges.html

[14] Baniecki, H., & Biecek, P. (2023). Adversarial Attacks and Defenses in Explainable Artificial Intelligence: A Survey. arXiv preprint arXiv:2306.06123. https://doi.org/10.48550/arXiv.2306.06123

[15] Chuang, Y. N., Wang, G., Yang, F., Liu, Z., Cai, X., Du, M., & Hu, X. (2023). Efficient XAI techniques: A taxonomic survey. arXiv preprint arXiv:2302.03225. https://doi.org/10.48550/arXiv.2302.03225

[16] European Commission. (2021, April 21). Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts. Retrieved November 14, 2023, from https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52021PC0206